Why this matters: debugging is now a distributed systems problem

In a single-process application running on your laptop, debugging usually means setting a breakpoint, inspecting variables, and stepping through code. In modern production environments—microservices, serverless, containers, multiple databases, queues, caches, third-party APIs—debugging is often less about one bug and more about reducing uncertainty across a system.

A typical failure might involve:

- A frontend request triggering multiple backend services

- A partial outage in a region

- A downstream dependency degrading (latency spikes rather than total failure)

- A race condition that shows up only under specific load

- A deployment introducing subtle behavioral changes

Traditional “printf debugging” and local stepping still matter, but they aren’t enough. To debug reliably in production, you need observability: the ability to ask new questions about the system without redeploying code.

This article is a practical guide to debugging and observability for developers and engineers. It covers:

- Debugging workflows that scale from local dev to production

- Logging, metrics, and distributed tracing with concrete examples

- Tool comparisons and how to choose what fits

- Debugging techniques for performance, concurrency, and distributed failures

- Best practices for keeping your system debuggable over time

A mental model: from symptoms to root cause

A productive debugging process is structured. A simple and effective loop:

- Triage: What is the impact? Is it still happening? What changed?

- Reproduce (or approximate): Can you reproduce locally, in staging, or via synthetic traffic?

- Collect signals: Logs, metrics, traces, profiles, core dumps, request samples.

- Form hypotheses: Based on evidence, not guesswork.

- Test hypotheses: Reduce the search space quickly.

- Fix: Patch, mitigate, or rollback.

- Validate: Ensure it’s resolved and won’t regress.

- Learn: Add guardrails—tests, alerts, dashboards, runbooks.

Senior engineers often differ from juniors not in raw debugging ability, but in how quickly they can:

- Narrow the search space

- Use system signals effectively

- Avoid “blind changes” that don’t address the cause

Observability dramatically improves steps 3–5: collecting signals, forming hypotheses, and testing them.

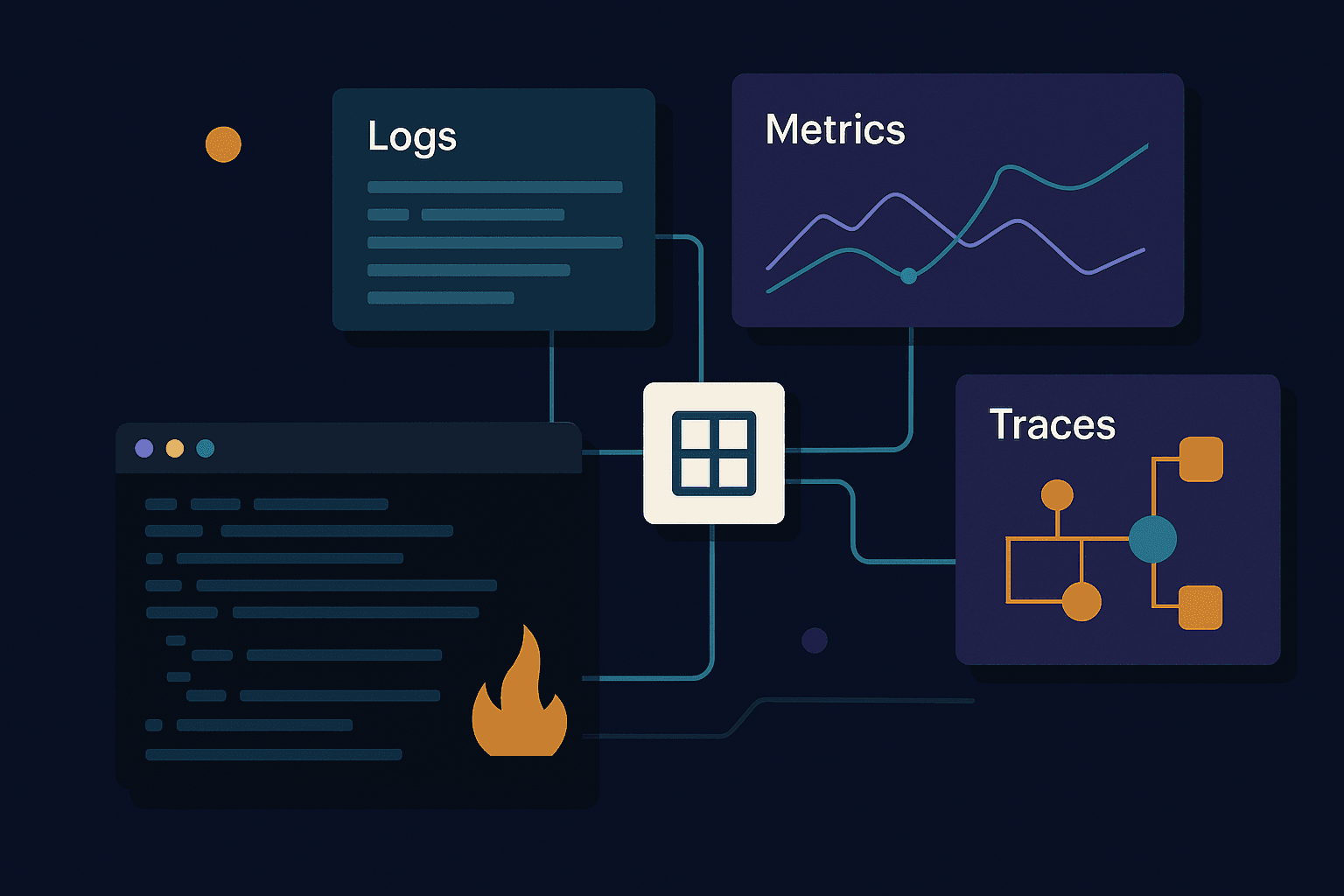

The three pillars of observability (and how to actually use them)

1) Logs: the narrative

Logs are discrete events—useful for answering questions like:

- What happened to request X?

- Why did this function take a fallback path?

- What error did a dependency return?

Structured logging (don’t ship plain strings)

Plain string logs are hard to query and correlate. Prefer structured logs (JSON) with consistent keys.

Node.js example (pino):

```js
import pino from "pino";

export const logger = pino({
  level: process.env.LOG_LEVEL ?? "info",
  base: null, // don't automatically add pid/hostname unless you want them
});

// Example usage
logger.info(
  {
    event: "user_login",
    userId: "u_123",
    method: "password",
    ip: "203.0.113.10",
  },
  "User logged in"
);
```

Best fields to include in most logs:

- `timestamp` (usually handled by the logger)

- `level`

- `service` / `app`

- `env` (prod/staging)

- `region` / `az`

- `request_id` or `trace_id` (critical)

- `user_id` (if applicable; be careful with privacy)

- `event` (short, stable identifier)

- `error` object with stack trace

Correlation IDs: your first debugging superpower

If you can’t connect logs across services, you’re debugging blind. Use a request ID propagated through HTTP headers.

Common choices:

- `traceparent` (W3C Trace Context) for tracing

- `x-request-id` for log correlation

In practice, do both: use OpenTelemetry for tracing and include trace IDs in logs.

2) Metrics: the shape of the system

Metrics answer questions like:

- Is latency increasing overall?

- Which endpoints are failing?

- Is error rate correlated with a deploy?

- Are we saturating CPU/memory/IO?

Common metric types:

- Counter: monotonically increasing (e.g., request count)

- Gauge: current value (e.g., queue depth)

- Histogram/Summary: latency distributions

The RED method for services

For request-driven services, a great starting point is RED:

- Rate: requests per second

- Errors: error rate

- Duration: latency (p50/p95/p99)

Pair with the USE method for infrastructure:

- Utilization

- Saturation

- Errors

Example: Prometheus metrics in Go

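```go
package main

import (
	"log"
	"net/http"
	"strconv"

	"github.com/prometheus/client_golang/prometheus"
	"github.com/prometheus/client_golang/prometheus/promhttp"
)

var (
	reqCount = prometheus.NewCounterVec(
		prometheus.CounterOpts{
			Name: "http_requests_total",
			Help: "Total HTTP requests by method, route, and status.",
		},
		[]string{"method", "path", "status"},
	)
	reqLatency = prometheus.NewHistogramVec(
		prometheus.HistogramOpts{
			Name:    "http_request_duration_seconds",
			Help:    "HTTP request latency in seconds.",
			Buckets: prometheus.DefBuckets,
		},
		[]string{"method", "path"},
	)
)

func init() {
	prometheus.MustRegister(reqCount, reqLatency)
}

// statusRecorder wraps http.ResponseWriter to capture the status code.
type statusRecorder struct {
	http.ResponseWriter
	status int
}

func (r *statusRecorder) WriteHeader(code int) {
	r.status = code
	r.ResponseWriter.WriteHeader(code)
}

// instrumentedHandler takes a fixed route name instead of using r.URL.Path,
// so paths containing IDs can't create unbounded label values.
func instrumentedHandler(route string, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		timer := prometheus.NewTimer(reqLatency.WithLabelValues(r.Method, route))
		defer timer.ObserveDuration()

		rec := &statusRecorder{ResponseWriter: w, status: http.StatusOK} // 200 if WriteHeader is never called
		next.ServeHTTP(rec, r)
		reqCount.WithLabelValues(r.Method, route, strconv.Itoa(rec.status)).Inc()
	})
}

func main() {
	// Example application route, instrumented.
	hello := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Write([]byte("ok"))
	})
	http.Handle("/hello", instrumentedHandler("/hello", hello))
	http.Handle("/metrics", promhttp.Handler())
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```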

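Pitfall: Avoid high-cardinality labels (e.g., userId, orderId, or raw URL paths containing IDs). Every unique combination of label values creates a separate time series, so unbounded labels can blow up metric storage and query cost.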

3) Traces: the causal chain

Distributed traces connect a single request across many services, showing:

- Which service was slow

- Which database query dominated latency

- Where retries or timeouts occurred

Traces are often the fastest route to root cause for latency problems.

OpenTelemetry: the standard approach

OpenTelemetry (OTel) is the most common vendor-neutral instrumentation approach. Most vendors ingest OTel traces.

Node.js example with OpenTelemetry (high-level sketch):

```js
import { NodeSDK } from '@opentelemetry/sdk-node';
import { getNodeAutoInstrumentations } from '@opentelemetry/auto-instrumentations-node';
import { OTLPTraceExporter } from '@opentelemetry/exporter-trace-otlp-http';

const sdk = new NodeSDK({
  // With no explicit `url`, the exporter reads OTEL_EXPORTER_OTLP_TRACES_ENDPOINT,
  // or OTEL_EXPORTER_OTLP_ENDPOINT with "/v1/traces" appended.
  traceExporter: new OTLPTraceExporter(),
  instrumentations: [getNodeAutoInstrumentations()],
});

// Start before the rest of the app is loaded so auto-instrumentation can patch modules.
sdk.start();
```

To correlate logs with traces, ensure your logger includes trace_id / span_id. Many log appenders can do this automatically once OTel context propagation is set up.

Choosing tools: practical comparisons

Tool choice depends on scale, team familiarity, and budget. A few common categories:

Logging

- ELK/EFK (Elasticsearch + Logstash/Fluentd + Kibana): highly flexible, heavy operational overhead.

- Loki + Grafana: cheaper indexing model (labels + raw logs), integrates well with Prometheus.

- Cloud-native: CloudWatch Logs, GCP Cloud Logging, Azure Monitor—easy adoption, vendor lock-in.

- Vendors: Datadog Logs, Splunk—powerful search and alerting, can be expensive.

Rule of thumb: If you’re already using Grafana/Prometheus, Loki is a common fit. If you need extremely powerful ad-hoc search at large scale and can pay for it, Splunk/Datadog are common.

Metrics

- Prometheus: standard for Kubernetes; pull-based; great ecosystem.

- Grafana Mimir / Thanos: long-term storage and global querying for Prometheus.

- Datadog / New Relic: easy managed experience, strong UX.

Tracing

- Jaeger: open-source tracing; good baseline.

- Tempo: Grafana’s tracing backend; often paired with Loki/Mimir.

- Datadog APM / New Relic / Honeycomb: excellent product experiences.

Honeycomb note: particularly strong for high-cardinality event-based analysis, which can shorten debugging loops.

Debugging workflows by environment

Local debugging

Local debugging is about fast iteration.

Best practices:

- Use a debugger (breakpoints, watch expressions).

- Add unit tests reproducing the bug.

- Use deterministic seeds for randomness.

- Use a local replica of dependencies (Docker Compose).

Example Docker Compose for a service + Postgres + Redis:

```yaml
services:
  api:
    build: .
    ports: ["3000:3000"]
    environment:
      DATABASE_URL: postgres://postgres:postgres@db:5432/app
      REDIS_URL: redis://cache:6379
    depends_on: [db, cache] # starts db and cache first; does not wait until they are ready
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: postgres
      POSTGRES_DB: app # create the "app" database that DATABASE_URL points to
    ports: ["5432:5432"]
  cache:
    image: redis:7
    ports: ["6379:6379"]
```

Staging debugging

Staging is where you validate system behavior under realistic conditions.

What staging should have to be useful:

- Same deployment process as prod

- Similar config (feature flags, timeouts)

- Synthetic traffic / load tests

- Observability enabled (don’t treat it as a prod-only concern)

Production debugging

In production, prioritize safety and speed.

A safe order of operations:

- Confirm blast radius (which users/regions)

- Check recent changes (deploys, config, dependency status)

- Use dashboards (rate/errors/latency)

- Trace representative slow/error requests

- Use logs to confirm hypotheses

- Mitigate (rollback, disable feature flag, shed load)

- Then fix forward

Practical incident debugging: an end-to-end example

Scenario: users report “checkout is slow” and sometimes fails.

Step 1: Metrics first

You check a dashboard:

- `checkout` endpoint p95 jumped from 400ms to 4s

- Error rate increased from 0.2% to 3%

- CPU is normal, but DB connection pool is saturated

This suggests the service isn’t CPU-bound; it’s waiting.

Step 2: Traces to find the bottleneck

A trace shows:

- `CheckoutService` span: 4.2s

- `PaymentService` span: 3.9s

- Inside `PaymentService`, a call to `FraudScoreAPI` takes 3.7s

- Retries are occurring

Now you know where latency is introduced.

Step 3: Logs to understand why

Query logs for PaymentService filtered by trace_id from a slow request.

You find:

- `FraudScoreAPI timeout` messages

- A fallback path is disabled due to a config change

Step 4: Mitigate

- Re-enable fallback via feature flag

- Reduce timeout and retry budget to prevent pile-ups

- Consider circuit breaker behavior

Step 5: Validate and harden

- Add an alert: high p95 latency for FraudScoreAPI dependency

- Add dashboard panels: dependency latency + retry counts

- Add a load test in staging that simulates slow FraudScoreAPI responses

Debugging techniques that save hours

1) Minimize the search space with binary questions

Ask questions that split possibilities:

- Is it all endpoints or one?

- All regions or one?

- Only new deployments?

- Only certain users/tenants?

Each question narrows the problem faster than random log searching.

2) Use “diff debugging”

Compare:

- Before/after deploy

- Healthy vs unhealthy region

- Requests that succeed vs fail

A diff often reveals the key.

3) Feature flags as debugging tools

Feature flags aren’t just product tools—they’re operational tools.

Use them for:

- Rapid mitigation

- A/B testing performance improvements

- Safely enabling verbose logging for a subset of traffic

Caution: Flag complexity can become technical debt; document and prune.

4) Sampling for high-volume systems

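Keeping every log line and every trace can be costly and noisy. Use sampling:

- Head-based sampling: decide at ingress, before the request's outcome is known

- Tail-based sampling: decide after the trace completes, so slow and failed traces can be kept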

Tail-based sampling is especially effective: keep what you’ll need for debugging.

Performance debugging: profiles, flame graphs, and latency budgets

When latency or CPU usage spikes, don’t guess—profile.

Profiling options

- CPU profiling: find hot functions

- Heap profiling: memory leaks, excessive allocations

- Block/Mutex profiling: lock contention

- IO profiling: slow disk/network calls

Go: pprof

In Go services, net/http/pprof is a standard approach.

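```go
import (
	"log"
	"net/http"
	_ "net/http/pprof" // registers /debug/pprof/ handlers on http.DefaultServeMux
)

// Expose on a separate, localhost-only port in production (inside main).
// Serve the application from its own mux so pprof isn't on the public port.
go func() {
	log.Println(http.ListenAndServe("localhost:6060", nil))
}()
```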

Then:

```bash
# Quote the URL so shells like zsh don't treat "?" as a glob.
go tool pprof "http://localhost:6060/debug/pprof/profile?seconds=30"
```

Use the flame graph view (or top) to locate hotspots.

Java: JFR (Java Flight Recorder)

JFR is excellent for production-safe profiling, especially on the JVM.

Linux: eBPF-based tools

Tools like bcc and bpftrace, plus eBPF-based platforms such as Pixie, Parca, and Datadog Universal Service Monitoring, can profile and trace without invasive code changes.

When to use eBPF:

- You suspect kernel/network issues

- You need system-wide visibility

- You can’t easily redeploy instrumentation

Concurrency and distributed failure patterns

Race conditions and heisenbugs

Symptoms:

- Failures disappear when logging is added

- Only occurs under load

- Non-deterministic test failures

Techniques:

- Add stress tests

- Use deterministic schedulers when available

- Enable race detectors (e.g., `go test -race`)

- Reduce shared mutable state
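A classic case that `go test -race` catches is many goroutines incrementing a shared counter with `n++`. That statement is a read-modify-write, so two goroutines can interleave and lose increments, and the result varies from run to run. The sketch below shows the fixed version using `atomic.Int64` (Go 1.19+); a `sync.Mutex` around the increment would work equally well. `countConcurrently` is a hypothetical name.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// countConcurrently increments a shared counter from `workers` goroutines,
// `perWorker` times each, and returns the final value.
//
// The racy version would declare `var n int` and do `n++`; the race detector
// flags that. atomic.Int64 makes each increment indivisible.
func countConcurrently(workers, perWorker int) int64 {
	var n atomic.Int64
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < perWorker; j++ {
				n.Add(1)
			}
		}()
	}
	wg.Wait() // all increments happen-before this returns
	return n.Load()
}

func main() {
	fmt.Println(countConcurrently(8, 10000)) // 80000
}
```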

Retries: the silent outage multiplier

Retries can amplify load on struggling dependencies.

Best practices:

- Use exponential backoff + jitter

- Set a retry budget (don’t retry forever)

- Respect idempotency

- Pair retries with timeouts and circuit breakers

Circuit breakers and bulkheads

- Circuit breaker: stop calling a failing dependency temporarily

- Bulkhead: isolate resource pools so one dependency can’t starve others

These patterns reduce cascading failures and make incidents easier to debug.

Debugging in Kubernetes: what you can do safely

Kubernetes adds layers: pods, nodes, networking, sidecars.

First checks

- `kubectl get pods -n <ns>`: restarts? crash loops?

- `kubectl describe pod ...`: events, OOMKilled, image pull issues

- `kubectl logs ... --previous`: logs from the previously crashed container

Ephemeral containers for live debugging

Ephemeral containers allow you to attach debug tools without rebuilding images.

```bash
kubectl debug -n myns pod/my-pod -it --image=busybox --target=my-container
```

Use this to:

- Inspect filesystem

- Run `nslookup`, `curl`, `tcpdump` (with the right image)

- Validate env vars and mounted secrets

Security note: Restrict who can do this; it’s powerful.

Defensive coding for debuggability

Debuggability is a design property. You can bake it in.

1) Explicit errors with context

Prefer error wrapping with context.

Go example:

```go
if err != nil {
	return fmt.Errorf("fetch user %s: %w", userID, err)
}
```

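Each layer prepends its own context, so the final message reads like a breadcrumb trail from caller to root cause in logs and traces, while `%w` keeps the underlying error inspectable.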

2) Timeouts everywhere

Most “hung” systems are missing timeouts.

- HTTP client timeouts

- DB query timeouts

- Queue consumer visibility timeouts

Timeouts make failures observable and bounded.

3) Idempotency keys

For payment/checkout-like systems, idempotency keys prevent duplicate side effects and make retry behavior safe.

4) Health checks that reflect reality

- Liveness: is the process running?

- Readiness: can it handle traffic now?

If readiness doesn’t reflect dependency health, Kubernetes may route traffic to a broken instance.

Alerting that doesn’t burn out your team

Bad alerting causes alert fatigue, which increases downtime.

Principles

- Alert on symptoms, not causes

- Page only when user impact is likely

- Use multi-window, multi-burn-rate alerts for SLOs

SLO-based alerting

Instead of alerting on “CPU > 80%”, alert on “error budget burn rate”.

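If your SLO is 99.9% successful requests, your error budget is 0.1% of requests. Burn rate is the observed error rate divided by that budget: a 1% error rate is a burn rate of 10, which would exhaust a 30-day budget in 3 days. Page when the burn rate shows you're spending the budget too fast.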

This aligns alerts to user experience and reduces noise.
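The multi-window, multi-burn-rate check can be sketched as two conditions that must both hold: a long window shows the burn is significant, and a short window shows it is still happening, so the alert clears quickly after recovery. The thresholds in the comments (14.4 over a 1h/5m pair, 6 over 6h/30m) are the starting points suggested in Google's SRE Workbook for a 30-day SLO window. `Window`, `burnRate`, and `shouldPage` are hypothetical names; in practice this logic usually lives in alerting rules (e.g., PromQL) rather than in application code.

```go
package main

import "fmt"

// burnRate is how fast the error budget is being spent relative to plan:
// 1.0 means the budget lasts exactly the SLO window; 10 means ten times faster.
func burnRate(errorRatio, slo float64) float64 {
	return errorRatio / (1 - slo)
}

// Window holds the observed error ratio (failed / total) for a lookback window.
type Window struct {
	Name       string
	ErrorRatio float64
}

// shouldPage fires only when BOTH windows exceed the burn-rate threshold.
func shouldPage(long, short Window, slo, threshold float64) bool {
	return burnRate(long.ErrorRatio, slo) >= threshold &&
		burnRate(short.ErrorRatio, slo) >= threshold
}

func main() {
	const slo = 0.999
	// 14.4 over 1h/5m corresponds to spending 2% of a 30-day budget in 1 hour.
	fmt.Println(shouldPage(Window{"1h", 0.02}, Window{"5m", 0.03}, slo, 14.4))   // true
	fmt.Println(shouldPage(Window{"1h", 0.02}, Window{"5m", 0.0005}, slo, 14.4)) // false: already recovered
}
```

A brief spike that pushes only the short window over the threshold does not page either, which is what keeps this scheme quieter than single-threshold alerts.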

Debugging checklists (copy/paste for your runbooks)

Production latency spike checklist

- Is it global or isolated (region/az/tenant)?

- Did a deploy or config change occur?

- Which endpoint(s) and which status codes?

- Traces: which span dominates latency?

- Dependency dashboards: DB, cache, external APIs

- Retry rate increased?

- Connection pools saturated?

- Mitigation: rollback, disable feature, increase capacity, circuit breaker

Elevated error rate checklist

- Error types: timeouts vs 5xx vs 4xx

- Which component emits the error?

- Correlate errors with deploys

- Check logs for a representative trace/request ID

- Validate credentials/secrets rotation events

- Validate DNS and networking

Best practices summary

- Instrument early: add logs/metrics/traces before you need them.

- Correlate everything: propagate trace IDs and request IDs.

- Prefer structured logs: stable fields beat ad-hoc strings.

- Avoid high cardinality metrics: use traces/logs for per-entity detail.

- Use traces for latency: they reveal the critical path.

- Profile for performance: flame graphs beat intuition.

- Design for failure: timeouts, retries with budgets, circuit breakers.

- Runbooks + postmortems: convert incidents into system improvements.
